Google Search confirms it’s requiring JavaScript to block bots and scrapers, like SEO Tools

Google has changed how its search results are served, which will also help secure it against bots and scrapers. Whether this will further affect SEO tools, or whether they can use a headless Chrome that runs JavaScript, remains an open question at the moment, but it’s likely that Google is using rate limiting to throttle how many pages can be requested within a set period of time.

Ryan Jones’ SERPrecon is back up and running, according to a tweet:

“Good news. We are back up and running. Thanks for bearing with us.”

SERPrecon enables users to compare search results against competitors over time, as well as run competitor comparisons using “vectors, machine learning and natural language processing.” It is quite likely one of the more useful SEO tools available, and reasonably priced, too.

Google quietly updated their search box to require all users, including bots, to have JavaScript turned on when searching.

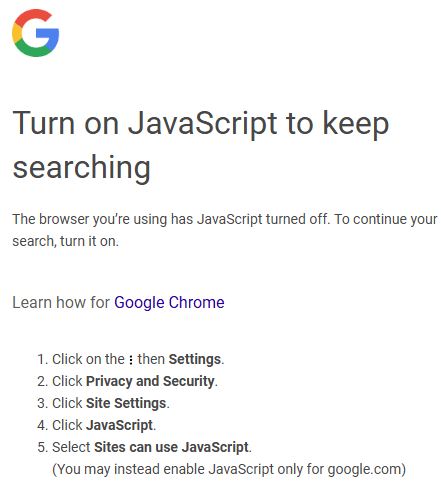

Surfing Google Search without JavaScript turned on results in the following message:

Turn on JavaScript to keep searching

The browser you’re using has JavaScript turned off. To continue your search, turn it on.

In an email to TechCrunch, a Google spokesperson shared the following details:

“Enabling JavaScript allows us to better protect our services and users from bots and evolving forms of abuse and spam, …and to provide the most relevant and up-to-date information.”

JavaScript possibly enables personalization in the search experience, which is what that spokesperson may mean by providing the most relevant information. But JavaScript can also be used for blocking bots.

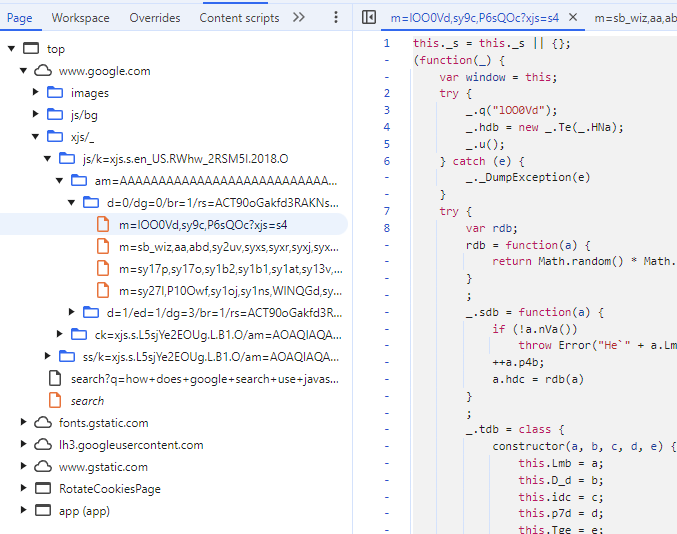

Using the latest version of Chrome, I copied some of the JavaScript via Chrome Dev Tools and ran it through ChatGPT to ask what it does. One part of it may relate to restricting abusive requests for documents.

ChatGPT gave me the following feedback:

“Core Functionalities

Randomized Value Generation (rdb)

- Generates a random value based on properties (D_d, idc, and p4b) of the input object a, constrained by p7d.
- This may be used for rate-limiting, exponential backoff, or similar logic.

Purpose and Context

From its components, the script:

- Likely handles request retries or access control for web resources.
- Implements a policy enforcement system, where:
  - Policies determine if requests are valid.
  - Errors are logged and sometimes retried based on rules.
  - Randomized delays or limits might control the retry mechanism.
- Appears optimized for error handling and resilience in distributed or high-traffic systems, possibly within a Google service or API.”

ChatGPT said that the code may use rate limiting, which enforces a limit on the number of actions (e.g., API requests) a user or system can perform within a specific time frame.

In this code, the random values generated by rdb could be used to introduce variability in when or how often requests are allowed, helping to manage traffic effectively.
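To make the idea of rate limiting concrete, here is a minimal sketch of a sliding-window limiter. It is not Google’s code and says nothing about what rdb actually does; the class name, the `client_id` key, and the limit values are all hypothetical and chosen only for illustration.

```python
from collections import deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` actions per `window_seconds` per client.

    Hypothetical illustration of rate limiting in general; not taken from
    any Google script.
    """

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = {}  # client_id -> deque of accepted request times

    def allow(self, client_id, now):
        """Return True if the client may make a request at time `now` (seconds)."""
        timestamps = self._history.setdefault(client_id, deque())
        # Forget requests that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False  # over the limit: this request is throttled
        timestamps.append(now)
        return True


# Three requests are allowed per 10 seconds; the fourth is rejected until
# the oldest request falls out of the window.
limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10.0)
results = [limiter.allow("scraper", now=t) for t in (0.0, 1.0, 2.0, 3.0, 10.5)]
print(results)  # [True, True, True, False, True]
```

A client that stays under the limit never notices the limiter, while a scraper requesting many pages in a short burst starts getting rejected.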

ChatGPT explained that exponential backoff is a way to limit how often a user or system can retry a failed action: the wait between retries increases exponentially with each failure.

ChatGPT explained that random value generation could be used to manage access to resources to prevent abusive requests.
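As a rough illustration of how exponential backoff and random values can work together, the sketch below doubles the maximum wait after each failure and then picks a random fraction of it (often called “jitter”). The function names, the `base` and `cap` defaults, and the retry policy are assumptions made for this example; none of them come from Google’s JavaScript.

```python
import random
import time


def backoff_ceiling(attempt, base=1.0, cap=60.0):
    """Longest wait (seconds) before retry number `attempt` (0-based).

    Doubles with each failed attempt and never exceeds `cap`.
    """
    return min(cap, base * 2 ** attempt)


def retry_with_backoff(action, max_attempts=5, base=1.0, cap=60.0,
                       sleep=time.sleep, rng=random.random):
    """Call `action` until it succeeds or `max_attempts` is used up.

    Hypothetical helper: `sleep` and `rng` are injectable so the behaviour
    can be checked without real waiting or real randomness.
    """
    for attempt in range(max_attempts):
        try:
            return action()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the last error
            # Wait a random fraction of the current ceiling so that many
            # clients do not all retry at the same moment.
            sleep(rng() * backoff_ceiling(attempt, base, cap))


print([backoff_ceiling(n) for n in range(8)])
# [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 60.0, 60.0]
```

The randomness matters because a fixed schedule would have every blocked client retrying in lockstep, recreating the traffic spike the backoff was meant to smooth out.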

I don’t know for certain that this is what that specific JavaScript is doing. That’s what ChatGPT explained, and it matches the information Google shared that it is using JavaScript as part of its strategy for blocking bots.

Semrush Says It Was Never Affected

Semrush commented on a LinkedIn discussion that a delay that was seen was due to maintenance and didn’t have anything to do with Google’s JavaScript requirement.

They wrote:

“Hi Natalia Witczyk, the delay you saw yesterday was due to general maintenance within our Position Tracking tool, we are not experiencing any issues related to the event with Google but will continue to monitor the situation. We’d recommend refreshing your project, if you are still having issues please send us a DM or reach out to our support…”

One of the observations made by search marketers across social media is that dealing with the blocks may increase the resources needed for crawling, a cost that may in turn be passed on to users in the form of price increases.

Vahan Petrosyan, Director of Technology at Search Engine Journal, observed:

“Scraping Google with JavaScript requires more computing power. You often need a headless browser to render pages. That adds extra steps, and it increases hosting costs. The process is also slower because you have to wait for JavaScript to load. Google may detect such activity more easily, so it can be harder to avoid blocks. These factors make it expensive and complicated for SEO tools to simply “turn on” JavaScript.”

I have 25 years hands-on experience in SEO, evolving along with the search engines by keeping up with the latest …

In a world ruled by algorithms, SEJ brings timely, relevant information for SEOs, marketers, and entrepreneurs to optimize and grow their businesses — and careers.

Copyright © 2025 Search Engine Journal. All rights reserved. Published by Alpha Brand Media.
